Formal linguistics and functional explanation: Bridging the gap

Frederick J. Newmeyer



1. Two orientations to the study of grammar

All normal human beings have a cognitive system called a ‘grammar’ that encodes the syntactic, phonological, and semantic properties of their language.[1] This system underlies our ability to use language in conversation and as a vehicle for rational thought. The obvious question to raise is to what extent the properties of this system have been shaped by its function. There is a bewildering variety of answers to this question, which run the spectrum from ‘function has affected structure profoundly’ to ‘function probably has not affected structure at all’. Unfortunately, the answers that one hears to this question are often more a consequence of one’s research commitments and positions on a variety of other topics than of empirical evidence bearing directly on the question. In particular, the answer is typically a function of one’s orientation to linguistic theory in general.

It seems reasonable, then, to begin by identifying the orientations to the study of language. Broadly speaking, there are really just two. The first we can call the ‘formalist’ or ‘generative’ orientation (the terms are not synonymous, but for our purposes the differences are not significant). What characterizes this orientation is a set of autonomy positions of varying degrees of strength. The weakest is the autonomy of grammar:

| (1) | The Autonomy of Grammar: At the heart of language is a structural system with formal principles relating sound and meaning. |

This view sees the grammar as a whole as a discrete semiotic system, in which form and meaning are interrelated. The idea of the autonomy of grammar was bequeathed to the field by Ferdinand de Saussure in the early twentieth century.

The strongest formalist position is that of the autonomy of syntax:

| (2) | The Autonomy of Syntax: The syntactic rules of a language make no reference to meaning, discourse, or language use. |

That is, the distribution of syntactic elements is governed by its own algebra, and the principles of that algebra make no reference to the semantic or pragmatic properties of the formal elements. In the remainder of this paper I will assume that the formalist orientation entails the stronger position as well as the weaker.

The other orientation in the field is the ‘functionalist’ one. William Croft expresses the goals of functionalism succinctly:

| Functionalism seeks to explain language structure in terms of language function. It assumes that a large class of fundamental linguistic phenomena are the result of the adaptation of grammatical structure to the function of language (Croft, 1990, p. 155). |

All functionalist models reject the autonomy of syntax on the grounds that the function of conveying meaning (in its broadest sense) has so affected grammatical form that it is senseless to separate form from its semantic and pragmatic determinants. However, some functionalist approaches accept the autonomy of grammar, while others reject it. In the former camp one finds mainstream Praguean work, from that of Mathesius (1927/1983) to Functional Generative Description (Sgall, Hajičová and Panevová, 1986); certain frameworks heavily indebted to Praguean work, such as Functional Grammar (Dik, 1989) and Systemic Functional Grammar (Halliday, 1985); and other frameworks that developed independently, such as Role-and-Reference Grammar (Van Valin, 1993) and Cognitive Grammar (Langacker, 1987). In the latter camp, one finds mainstream American functionalism (Bybee, Perkins and Pagliuca, 1994; Hopper and Thompson, 1984) and most approaches according ‘grammaticalization’ a central theoretical role (Heine, Claudi and Hünnemeyer, 1991).

The question to be addressed in this paper is whether the adoption of the autonomy of syntax precludes any hope of providing functional explanations for why grammatical systems have the properties that they have. The following quote from two prominent functionalists expresses succinctly the view that autonomy does preclude functional explanation:

| The autonomy of syntax cuts off [sentence structure] from the pressures of communicative function. In the [formalist] vision, language is pure and autonomous, unconstrained and unshaped by purpose or function (Bates and MacWhinney, 1989, p. 5). |

The goal of this paper is to argue that the autonomy of syntax and functional explanation are not incompatible. The argument will take the following form. First, in §2, it will be pointed out that in other disciplines no one perceives an incongruity about the properties of some formal system within their domain of inquiry being explained in terms of their use or function. The paper will then go on (in §3) to discuss the sorts of phenomena that suggest that the idea of autonomous syntax is correct. Section 4 will defend the idea that certain properties of syntactic systems do have a functional motivation based ultimately in language use. The most convincing functional explanations appeal to pressure to allow language to be spoken and understood rapidly and to pressure to keep form and meaning close to each other. Section 5 will outline reasonable criteria for identifying convincing functional explanations and be followed by some general remarks on autonomy and functional explanation (§6) and a few highly speculative ideas on the mechanism by which grammatical systems may be shaped by external forces (§7). Section 8 is a brief conclusion.

2. Autonomy and functional explanation

Let us begin with a point that one could practically call a point of logic. Statements like the one from Bates and MacWhinney seem to take it for granted that once a system is characterized as autonomous, functional explanation of that system (or its properties) is impossible. Such is simply not true; oddly, it seems to be only linguists who have this curious idea. In other domains, formal and functional explanation are taken as complementary, not contradictory, as a look at a couple of other formal systems will indicate. A good example of a formal system is the game of chess. Chess is an autonomous system, in the sense that it comprises a finite number of discrete rules. Given the layout of the board and the pieces and their permissible moves, it is possible to ‘generate’ every chess game. But usage-based considerations went into the design of the system. Namely, it was designed in such a way as to ensure that it would be a satisfying pastime. And usage-based considerations can change the system. However unlikely, a decree from the International Chess Authority, for example, could prevent rooks from advancing more than four squares, on the grounds that the pleasure of the game would be thereby enhanced. Furthermore, in any actual game of chess, the moves are subject to the conscious will of the players, just as any act of speaking is a product of the (normally) conscious decision of the speaker. In other words, chess is both autonomous and explained functionally.

To take a more biological analogy, consider any bodily organ, say, the liver. The liver is an autonomous structural system in the sense that it can be characterized in terms of its component cells and their properties. Nevertheless, it has still been shaped by its function and use. The liver evolved in response to selective pressure for a more efficient role in digestion. And the structure of the liver can be affected by external factors. A lifetime of heavy drinking can change it profoundly (and for the worse).

The question, then, is whether grammar in general and syntax in particular are in relevant respects like the game of chess and like our bodily organs. The answer to this question, as we will see, is an unambiguous ‘yes’.

3. In defense of the autonomy of syntax

What sorts of facts, then, bear on whether syntax is autonomous? One extreme position is advocated by certain formalists, who think that all that is required is to demonstrate some mismatch between form and meaning or form and function. But if that were sufficient, then every linguist would believe in autonomous syntax. It is easy to come up with mismatches. The following examples (from Hudson et al., 1996) illustrate:

| (3) | a. | He is likely to be late. |
| | b. | *He is probable to be late. (likely, but not probable, allows raising) |
| (4) | a. | He allowed the rope to go slack. |
| | b. | *He let the rope to go slack. (let doesn’t take the infinitive marker) |
| (5) | a. | He isn’t sufficiently tall. |
| | b. | *He isn’t enough tall. / He isn’t tall enough. (enough is the only degree modifier that occurs postadjectivally) |

Defending the autonomy of syntax, then, clearly involves more than showing the existence of mismatches between form and meaning.

Many functionalists take the opposite extreme and believe that one can refute the autonomy of syntax simply by showing that form is in a systematic relationship with meaning and function. Consider:

| Crucial evidence for choosing a functionalist over a traditional Chomskian formalist approach [embodying the autonomy of syntax – FJN] would minimally be any language in which a rule-governed relationship exists between discourse/cognitive functions and linear order. Such languages clearly exist (Payne, 1998, p. 155). |

But all generative theories posit a rule-governed relationship between syntactic and semantic structure. There would be no ‘autonomous syntacticians’ if, in order to qualify as one, one had to reject rules linking form and meaning.

What is involved, then, in establishing the correctness of the autonomy of syntax? In a nutshell, it is necessary to establish the correctness of the following two hypotheses:

| (6) | a. | There exists an extensive set of purely formal generalizations orthogonal to generalizations governing meaning or discourse. |
| | b. | These generalizations ‘interlock’ in a system. |

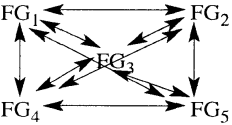

In other words, a full defense of the autonomy of syntax involves not merely pointing to extensive formal generalizations, but showing that these generalizations interact in a manner graphically illustrated in (7) (where ‘FG’ stands for ‘formal generalization’):

| (7) | |

It is considerably easier, of course, to illustrate (6a) than (6b). Due to space constraints, only one example will be provided, namely structures in English with displaced wh-phrases, as in (8–11):

| Wh-constructions in English: |
| Questions: |
| (8) | Who did you see? |
| Relative Clauses: |
| (9) | The woman who I saw |
| Free Relatives: |
| (10) | I’ll buy what(ever) you are selling. |
| Wh (Pseudo) Clefts: |
| (11) | What John lost was his keys. |

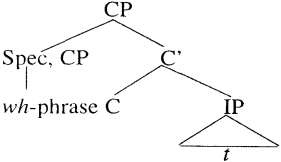

We have here a profound mismatch between syntactic form and the semantic and discourse properties associated with that form. In each construction type, the displaced wh-phrase occupies the same structural position, namely, the left margin of the phrase immediately dominating the rest of the sentence (in Principles-and-Parameters terminology, the ‘Specifier of CP’):

| (12) | |

Despite their structural parallelism, the wh-phrases in the four constructions differ from each other in both semantic and discourse function. As far as their semantics is concerned, one frequently reads that it is the (semantic) function of a fronted wh-phrase to set up an operator-variable configuration or to act as a scope marker. But this holds only in the least complex cases. Consider simple wh-questions, for example. In (13) what does indeed behave as a semantic operator and as a scope marker:

| (13) | What did Mary eat? = for what x, Mary ate x |

But the full range of wh-constructions gives no support to the idea that the semantic role of the attracting feature in general is to set up operator-variable relations. So in (14), for example, with an appositive relative clause, there is no operator-variable configuration corresponding to trace and antecedent:

| (14) | Ralph Nader, who several million Americans voted for, lost the election. |

By way of confirmation, as pointed out by Lasnik and Stowell, 1991, there is no weak crossover effect with appositive relatives:

| (15) | a. | Geraldᵢ, whoᵢ hisᵢ mother loves tᵢ, is a nice guy. |
| | b. | This bookᵢ, whichᵢ itsᵢ author wrote tᵢ last week, is a hit. |

Nor does the fronted wh-element reliably act as a scope marker. Consider partial Wh-Movement in German and Romani (McDaniel, 1989):

| (16) | Wasᵢ | glaubt | [IP Hans | [CP mit wem]ᵢ | [IP Jakob jetzt tᵢ | spricht]]? |
| | what | think | Hans | with who | Jacob now | speak |
| | ‘With whom does Hans believe Jacob is now talking?’ |
| (17) | Soᵢ | [IP o | Demìri mislinol [CP [kas]ᵢ [IP I Arìfa dikhl’a tᵢ]]] |
| | what | that | Demir think who the Arif saw |
| | ‘Whom does Demir think Arifa saw?’ |

The same point can be made in cases of multiple wh-phrases, where one is left in situ:

| (18) | Who remembers where we bought which book? (Baker, 1970) |

There are two interpretations, in neither of which the wh-phrase in SPEC of CP acts as a scope marker:

| (19) | Two interpretations of (18) (Chomsky, 1973, p. 283): |
| | a. For which x, x remembers for which z, for which y, we bought y at z |
| | b. For which x, for which y, x remembers for which z we bought y at z |

Indeed, Denham, 2000, argues persuasively that Wh-Movement and scope marking are completely dissociated in UG.

Furthermore, fronted wh-phrases in different constructions play very different roles in terms of information structure. In simple wh-questions, the sentence-initial wh-phrase serves to focus the request for a piece of new information, where the entire clause is presupposed except for a single element (Givón, 1990). Fronting in relative clauses, however, has nothing to do with focusing. Haiman, 1985, suggests that the iconic principle, ‘Ideas that are closely connected tend to be placed together’, is responsible for the adjacency of the relative pronoun to the head noun. In free relatives, the fronted wh-phrase actually fulfills the semantic functions of the missing head noun. Semantically, the fronted wh-phrase in pseudo-clefts is different still. As Prince, 1978, argues, the clause in which the wh-phrase is fronted represents information that the speaker can assume that the hearer is thinking about. But the function of the wh-phrase itself is not to elicit new information (as is the case with such phrases in questions), but rather to prepare the hearer for the focused (new) information in sentence-final position. In short, we have an easily characterizable structure manifesting a profound mismatch with meaning and function.[2]

We will now present supporting evidence for (6b), namely the idea that wh-constructions not only have an internal formal consistency, but they behave consistently within the broader structural system of English syntax. For example, in all four construction types, the wh-phrase can be indefinitely far from the gap:

| (20) | a. | Whoᵢ did you ask Mary to tell John to see ___ᵢ? |
| | b. | The woman whoᵢ I asked Mary to tell John to see ___ᵢ |
| | c. | I’ll buy what(ever)ᵢ you ask Mary to tell John to sell ___ᵢ. |
| | d. | Whatᵢ John is afraid to tell Mary that he lost ___ᵢ is his keys. |

And as (21) shows, each wh-construction is subject to the principle of Subjacency, informally stated as in (22):

| (21) | a. | *Whoᵢ did you believe the claim that John saw ___ᵢ? |
| | b. | *The woman whoᵢ I believed the claim that John saw ___ᵢ |
| | c. | *I’ll buy what(ever)ᵢ Mary believes the claim that John is willing to sell ___ᵢ. |
| | d. | *Whatᵢ John believes the claim that Mary lost ___ᵢ is his keys. |
| (22) | Subjacency |
| | Prohibit any relationship between a displaced element and its gap if ‘the wrong kind’ of element intervenes between the two. |

But Subjacency, as it turns out, constrains movement operations that involve no (overt) wh-element at all. For example, Subjacency accounts for the ungrammaticality of (23):

| (23) | *Mary is taller than I believe the claim that Susan is. |

In other words, the formal principles fronting wh-phrases interlock with the formal principle of Subjacency. One might try to subvert this argument by claiming that Subjacency is a purely functional principle, rather than an interlocking grammatical one. In fact, there is no doubt that the ultimate roots of Subjacency are a functional response to the pressure for efficient parsing (see Berwick and Weinberg, 1984; Newmeyer, 1991; Kirby, 1998). But over time, this parsing principle has become grammaticalized. Hence, there are any number of cases in which sentences that violate it are ungrammatical, even in the absence of parsing difficulty. Sentence (24a), a Subjacency violation, is ungrammatical, even though it is transparently easy to process. Minimally different (24b) does not violate Subjacency and is fully grammatical:

| (24) | a. | *What did you eat beans and? |
| | b. | What did you eat beans with? |

In other words, the wh-constructions of (8–11) are integrated into the structural system of English, of which Subjacency forms an integral part.

4. In defense of functional explanation

Let us now turn to explanations of grammatical structure based on language use. In fact, dozens of different external explanations have been appealed to in order to explain properties of grammatical structure. There is no space to mention them all, much less to evaluate them. However, I feel that it is fairly uncontroversial to single out the following three as the most appealed to of all in the functionalist literature:[3]

| (25) | a. | Parsing: There is pressure to shape grammar so the hearer can determine the structure of the sentence as rapidly as possible. |
| | b. | (Structure-concept) iconicity: There is pressure to keep form and meaning as close to each other as possible. |
| | c. | Information flow in discourse: There is pressure for the syntactic structure of a sentence to mirror the flow of information in discourse. |

It will now be argued that the first two have profoundly influenced syntactic structure, but the third only to a very small degree.

The most comprehensive attempt to demonstrate how parsing pressure has influenced grammatical structure is Hawkins, 1994. The central insight of this work is that it is in the interest of the hearer to recognize the syntactic groupings in a sentence as rapidly as possible. This preference is realized both in language use and in the grammar itself. As far as use is concerned, when speakers have the choice, they will more often than not follow the parser’s preference. And as far as grammars are concerned, their structures will to a considerable degree reflect parsing preferences.

Hawkins’s central principle is Early Immediate Constituents (EIC):

| (26) | Early Immediate Constituents (EIC): The hearer (and therefore the parsing mechanism) prefers orderings of elements that lead to the most rapid recognition possible of the structure of the sentence. |

EIC explains why long (or heavy) elements tend to come after short (or light) ones in English. Note that (27b) sounds better than (27a) and that (28b) sounds better than (28a). The ‘heavy’ elements (in boldface) tend to follow ‘light’ elements (in italics):

| (27) | a. | ?I consider everybody who agrees with me and my disciples about the nature of the cosmos smart. |
| | b. | I consider smart everybody who agrees with me and my disciples about the nature of the cosmos. |

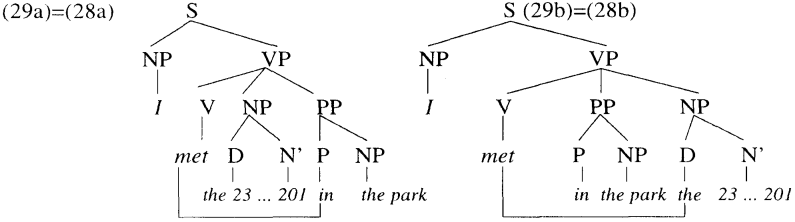

| (28) | a. | ?I met the twenty three people who I had taken Astronomy 201 with last semester in the park. |
| | b. | I met in the park the twenty three people who I had taken Astronomy 201 with last semester. |

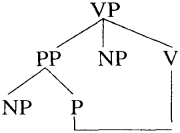

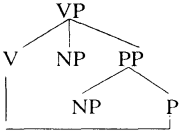

The structures of (28a–b) are depicted in (29a–b) respectively:

(28a) sounds worse than (28b), since it takes much longer to recognize the constituents of VP. Notice that the distance between V and PP in (29a) is much longer than the distance between V and NP in (29b).
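The comparison between (28a) and (28b) can be made concrete with a small calculation. The following is only a sketch under a simplifying assumption that is not stated in the text: each immediate constituent (IC) of the VP is taken to be recognized at its first word, which is plausible for head-initial English phrases. The function names and the constituent-to-word ratio are illustrative devices, not a reproduction of Hawkins's own formulation.

```python
from typing import List


def recognition_domain_size(ics: List[List[str]]) -> int:
    """Count the words from the first word of the first IC through the
    first word of the last IC.

    Simplifying assumption: every IC is recognized at its first word.
    """
    if not ics or any(len(ic) == 0 for ic in ics):
        raise ValueError("need at least one non-empty constituent")
    # All words of every IC but the last, plus the first word of the last IC.
    return sum(len(ic) for ic in ics[:-1]) + 1


def ic_to_word_ratio(ics: List[List[str]]) -> float:
    """Number of ICs divided by the size of the recognition domain."""
    return len(ics) / recognition_domain_size(ics)


# The three ICs of the VP in (28): verb, object NP, and PP.
V = ["met"]
NP = "the twenty three people who I had taken Astronomy 201 with last semester".split()
PP = "in the park".split()

vp_28a = [V, NP, PP]  # heavy NP before light PP
vp_28b = [V, PP, NP]  # light PP before heavy NP

for label, vp in (("28a", vp_28a), ("28b", vp_28b)):
    print(label, recognition_domain_size(vp), round(ic_to_word_ratio(vp), 2))
```

Under these assumptions the hearer must process fifteen words before all three constituents of the VP in (28a) are identified, but only five in (28b), which is the sense in which the heavy-last order is easier on the parser.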

EIC yields typological predictions too. In general, VO languages extrapose S’ subjects, while OV languages, like Japanese, tend to prepose S’ objects. In English discourse, the subject of (30a) will almost always be extraposed to (30b), while in Japanese discourse, the object of (31a) will frequently be preposed to (31b):

| (30) | a. | That John will leave is likely. |
| | b. | It is likely that John will leave. |
| (31) | a. | Mary-ga | [kinoo | John-ga | kekkonsi-ta to] | it-ta |
| | | Mary | yesterday | John | married that | said |
| | | ‘Mary said that John got married yesterday’ |
| | b. | [kinoo | John-ga | kekkonsi-ta to] | Mary-ga it-ta |

EIC provides an explanation. By extraposing the sentential subject in English, the recognition domain for the main clause is dramatically shortened, as illustrated in (32a–b). By preposing the sentential object in Japanese, we find an analogous recognition domain shortening, as in (33a–b):

| (32) | a. | S[S’[that S[John will leave]] VP[is likely]] |
| | b. | S[NP[it] VP[is likely S’[that S[John will leave]]]] |
| (33) | a. | S1[Mary-ga VP[S’[S2[kinoo John-ga kekkonsi-ta] to] it-ta]] |
| | b. | S2[S’[S1[kinoo John-ga kekkonsi-ta] to] Mary-ga VP[it-ta]] |

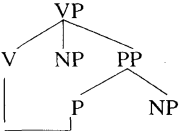

EIC makes even more interesting predictions about competence, that is, about grammaticalized orders, where the speaker has no choice about the positioning of phrases. For example, EIC explains why VO languages have prepositions and OV languages have postpositions. There are four logical possibilities, illustrated in (34a–d): VO and prepositional (34a); OV and postpositional (34b); VO and postpositional (34c); and OV and prepositional (34d):

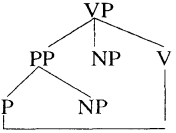

| (34) | a. | VO and prepositional (common) | b. | OV and postpositional (common) |
| | c. | VO and postpositional (rare) | d. | OV and prepositional (rare) |

In (34a) and (34b), the two common structures, the recognition domain for the VP is just the distance between V and P, crossing over the object NP. But in (34c) and (34d), the uncommon structures, the recognition domain is longer, since it involves the object of the preposition as well.
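The contrast among the four types in (34) can be sketched numerically. The linearizations below are assumptions made for illustration: the adpositional phrase is placed after the object in VO orders and before it in OV orders, and every NP is given a hypothetical length of two words. The symbols `OBJ` and `POBJ` (the verb's object and the adposition's object) and the function name are likewise invented for this sketch.

```python
from typing import Dict, List


def vp_domain(order: List[str], np_len: int = 2) -> int:
    """Return the number of words from V to P, inclusive.

    `order` is a sequence of the symbols 'V', 'P', 'OBJ', and 'POBJ'.
    V and P count as one word each; each NP is expanded to `np_len`
    words (a hypothetical, uniform length).
    """
    words: List[str] = []
    for symbol in order:
        if symbol in ("V", "P"):
            words.append(symbol)
        else:
            words.extend([symbol] * np_len)
    return abs(words.index("V") - words.index("P")) + 1


# Assumed linearizations of the four types in (34).
ORDERS: Dict[str, List[str]] = {
    "34a VO, prepositional": ["V", "OBJ", "P", "POBJ"],
    "34b OV, postpositional": ["POBJ", "P", "OBJ", "V"],
    "34c VO, postpositional": ["V", "OBJ", "POBJ", "P"],
    "34d OV, prepositional": ["P", "POBJ", "OBJ", "V"],
}

for label, order in ORDERS.items():
    print(label, vp_domain(order))
```

With two-word NPs, the two common types yield a domain of four words and the two rare types a domain of six, because in the latter the object of the adposition also falls between V and P.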

Another important processing principle, Dependent Nodes Later, is also proposed in Hawkins, 1994:

| (35) | Dependent Nodes Later: If node B is dependent on node A for a property assignment, the processor prefers B to follow A. |

This principle can be illustrated by the cross-linguistic tendency for fillers to precede gaps — a tendency observed in wh-questions, relative clauses, control structures, and a wide variety of ‘deletion’ constructions. Dependent Nodes Later also provides a processing explanation for the generalizations that antecedents tend to precede anaphors, topics tend to precede predications (cf. Japanese wa), restrictive relative clauses tend to precede appositives, agents tend to precede patients, and quantifiers and other operators tend to precede elements within their scope.

Let us turn now to structure-concept iconicity. A theme in much functionalist writing is that language structure has to a considerable degree an ‘iconic’ motivation. For our purposes, that means that the form, length, complexity, or interrelationship of elements in a linguistic representation reflects the form, length, complexity or interrelationship of elements in the concept that that representation encodes. For example, it is well known that syntactic units tend also to be conceptual units. Or consider the fact that lexical causatives (e.g. kill) tend to convey a more direct causation than periphrastic causatives (e.g. cause to die). So, where cause and result are formally separated, conceptual distance is greater than when they are not. The grammar of possession also illustrates structure-concept iconicity. Many languages distinguish between alienable possession (e.g. John’s book) and inalienable possession (e.g. John’s heart). In no language is the grammatical distance between the possessor and the possessed greater for inalienable possession than for alienable possession.

The third type of usage-based explanation appeals to the flow of information in discourse. Such explanations start from the fact that language is used to communicate and that communication involves the conveying of information. Therefore, it is argued, the nature of information flow should leave and has left its mark on grammatical structure. The great majority of functionalist work takes this idea for granted. Indeed, the tailoring of grammatical structure to the needs of discourse is the core idea of functionalism for many practitioners of that approach.

The communication of information has been appealed to, for example, in order to explain the ordering of the major elements within a clause. There are six ways to say ‘Lenin cites Marx’ in Russian, a typical so-called ‘free word-order’ language:

| (36) | a. | Lenin citiruet Marksa. |

|

| b. | Lenin Marksa citiruet. |

|

| c. | Citiruet Lenin Marksa. |

|

| d. | Citiruet Marksa Lenin. |

|

| e. | Marksa Lenin citiruet. |

|

| f. | Marksa citiruet Lenin. |

Each sentence is interpreted with the initial element representing old information and the final element representing new information. A functionalist claim pioneered many decades ago by the linguists of the Prague School is that the discourse principle of Communicative Dynamism governs the ordering. The passage of time from past to present to future is mirrored iconically in discourse by the ordering of old information before new information (see, for example, Firbas, 1987).
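The six orders in (36) are simply the permutations of three words, and the interpretive generalization just stated can be applied to each mechanically. The sketch below does only that; the function name and the dictionary keys are illustrative, and nothing in it models Communicative Dynamism beyond the first-is-old, last-is-new reading given in the text.

```python
from itertools import permutations
from typing import Dict, List

WORDS = ("Lenin", "citiruet", "Marksa")


def information_structure() -> List[Dict[str, str]]:
    """Pair every word order with its old and new element.

    Following the text, the initial element is read as old information
    and the final element as new information.
    """
    readings = []
    for order in permutations(WORDS):
        sentence = " ".join(order)
        # Capitalize only the first letter so that names keep their capitals.
        sentence = sentence[0].upper() + sentence[1:] + "."
        readings.append({"sentence": sentence, "old": order[0], "new": order[-1]})
    return readings


for reading in information_structure():
    print(reading["sentence"], "| old:", reading["old"], "| new:", reading["new"])
```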

5. Evaluating functional explanations

We have now discussed three much appealed to types of usage-based explanation; as noted, dozens more have been suggested in the literature. The question, then, is how one can be sure that one has identified a convincing manner in which language use might be said to have affected language structure. Three criteria for identifying a true functional influence on grammars seem particularly relevant. By these criteria, parsing and iconicity-based explanations do very well, but discourse-based ones rather poorly. Each criterion can be illustrated with reference to an uncontroversial external motivation for a structural change — cigarette smoking as a cause of cancer.

The first criterion is precise formulation. Clearly the smoking-cancer link meets this criterion. It is quite possible to gauge whether and how much people smoke. But the criterion rules out, at least for the time being, fuzzier ideas like state of mind, attitude toward life, and personality type as causes of cancer. For grammar, precise formulation immediately excludes ‘predispositions toward metaphor’, ‘speaker empathy’, ‘playfulness’, and many other suggested functional influences. The second criterion is demonstrable linkage between cause and effect. There is again no problem here with cigarette smoking and cancer. The effect of the components of smoke upon cells is well understood. Demonstrating linkage is much more difficult and more indirect, however, when we are dealing with cognitive structures than with anatomical structures. The third criterion is measurable typological consequences. It is well established, for example, that the more people smoke, the more likely they are to develop cancer.

By these criteria, only two candidates for external motivation of grammatical structure — parsing pressure (as embodied in EIC) and pressure for an iconic relationship between form and meaning — seem fully convincing.

Beginning with parsing, EIC meets all three criteria. First, Hawkins formulates it precisely (though its application depends on particular constituent structure assignments, which might be controversial). Second, there is demonstrable linkage. The advantage to parsing sentences rapidly can hardly be controversial. And we know that parsing is fast and efficient. Every word has to be picked out from an ensemble of 50,000, identified in one-third of a second, and assigned to the right structure. Third, Hawkins presents literally dozens of typological predictions that follow from EIC.

What about pressure for structure-concept iconicity, that is, for grammatical structure to reflect conceptual structure? Here the evidence is not as strong as for parsing pressure. It is (or at least can be) formulated precisely: most formal models of grammar build in a close relationship between form and meaning. The linkage required has its roots in processing as well. In brief, comprehension is made easier when syntactic units are isomorphic to units of meaning than when they are not. Experimental evidence shows that the semantic interpretation of a sentence proceeds on line as the syntactic constituents are recognized. The typological predictions have yet to be tested, though one assumes that they will be borne out.

Now let us consider information flow in discourse. What do we mean by that precisely? We know that there is a general tendency for old information to precede new information. The question is what we need to appeal to in order to explain this tendency. A long tradition sees it as somehow rooted in human nature that the order of elements should be first old and then new. For example, Jan Firbas discusses the connection between old information and low degrees of communicative dynamism and new information and high degrees of communicative dynamism and goes on to write:

| Sentence linearity … makes the speaker/writer arrange the linguistic elements in a linear sequence, in a line, and develop the discourse step-by-step. I believe to be right in assuming that the most natural way of such gradual development is to begin at the beginning and proceed in a steady progression, by degrees, towards the fulfillment of the communicative purpose of the discourse. If this assumption is correct, then a sequence showing a gradual rise in degrees of CD (i.e. starting with the lowest degree and gradually passing on to the highest degree) can be regarded as displaying the basic distribution of CD. I also believe to be right in assuming that this conclusion is quite in harmony with the character of human apprehension (Firbas, 1971, p. 138). |

In other words, human nature and the nature of discourse per se lead to old occurring before new. Much current functionalism shares this view, though without any commitment to the specifics of Praguean CD. In fact, one can make the case that old-before-new is largely a processing effect. Logically speaking, new information is generally predicated of old information, and, as we have already seen, there is a processing principle that predications follow the elements that they are predications of. Following this principle has advantages for the speaker as well as for the hearer. As noted in Arnold et al., 2000, the production of an utterance requires the speaker to decide what to say, formulate the linguistic expressions to communicate the idea, and articulate the utterance. Such is all the more difficult if one is formulating something ‘new’. By postponing new and difficult elements, the speaker gives himself or herself much needed time.

In short, the structure of discourse may not be an independent variable. The order of units of information structure can be reduced to a significant extent to processing needs.

6. Some general remarks on autonomy and functional explanation

It is important to restress that the fact that the sentences of a language are best specified by autonomous rules and principles does not mean that external functional motivations were not involved in giving these constructions their shape. If we return to wh-constructions, it is patently obvious that their function has helped to shape their form. Some wh-constructions are operator-variable constructions; it is ‘natural’ that operators should precede the variables that they bind. The function of the wh-phrase in direct questions is to focus on a bit of missing information; it is natural that one would want to place this element at the beginning. The issue is not whether properties of wh-constructions are externally motivated or not. Certainly they are. The issue is whether in a synchronic grammar the formal properties of these constructions are best characterized independently of their meanings and the functions that they serve. And the answer is yes — they should be so characterized. The reason is that whatever functional considerations went into shaping a particular formal structure, that structure takes on a life of its own, so to speak, so it is no longer beholden to whatever functions brought it into being. In other words, the autonomous structural system takes over. For example, as Fodor, 1984, has pointed out, one cannot derive constraints just from parsing since there are sentences that are constraint violations that pose no parsing difficulty (see also examples 24a–b above):

| (37) | a. | *Who were you hoping for ___ to win the game? |
|      | b. | *What did the baby play with ___ and the rattle? |

and pairs of sentences of roughly equal ease to the parser, where one is grammatical and the other is a violation, as in (38a–b) and (39a–b):

| (38) | a. | *John tried for Mary to get along well with ___. |
|      | b. | John is too snobbish for Mary to get along well with ___. |

| (39) | a. | *The second question, that he couldn’t answer ___ satisfactorily was obvious. |
|      | b. | The second question, it was obvious that he couldn’t answer ___ satisfactorily. |

The structural system of English decides the grammaticality — not the parser. The point is that languages are filled with constructions that arose in the course of history to respond to some functional pressure, but, as the language as a whole changed, ceased to be very good responses to that original pressure. Rather, the functionally motivated structure generalizes and comes to encode meanings and functions that do not reflect the original pressure. Parsing ease, pressure for an iconic relationship between form and meaning, and so on really are forces that shape grammars. Adult speakers, in their use of language, are influenced by such factors to produce variant forms reflecting the influences of these forces. Children, in the process of acquisition, hear these variant forms and grammaticalize them. In that way, over time, certain functional influences leave their mark on grammars. But these influences operate at the level of language use and acquisition — and therefore language change — not internally to the grammar itself.

To give one example in support of this claim, consider the Modern English genitive. It may either precede or follow the noun it modifies:

| (40) | a. | GEN-N: Mary’s mother’s uncle’s lawyer |
|      | b. | N-GEN: the leg of the table |

The GEN-N ordering is unexpected, since English is otherwise almost wholly a right-branching language. So why do English-speaking children acquire the GEN-N ordering? The short, and 100% correct, answer is ‘conventionality’. They learn that ordering because they detect it in the ambient language of their speech community. But the long answer is very interesting and drives home the great divide between the functional explanation of a grammatical change and the force of conventionality that leads to the preservation of the effects of that change.

Old English 1000 years ago was largely left-branching with dominant orders of OV and GEN-N. This is the correlation motivated by parsing efficiency (Hawkins, 1994). The shift to VO order in the Middle English period was matched by a shift to N-GEN order. But then, after a certain time, everything started to reverse itself, with the text count of GEN-N order increasing dramatically. Why did this reversal occur? According to Kroch, 1994, and Rosenbach and Vezzosi, 2000, it may have been a result of the two genitives becoming ‘functionally differentiated’. The GEN-N construction became favored for animates while the N-GEN construction has tended to be reserved for inanimates. That idea has a lot to commend it. It is well known that there is a tendency for animates to occur earlier and inanimates later. Of course, it has to be pointed out that we are talking only about tendencies here, since inanimates can occur in the GEN-N construction (41a) and animates in the N-GEN construction (41b):

| (41) | a. | The table’s leg |
|      | b. | The treachery of the enemy. |

Now, then, what would the relation be between the rules and principles that license these two orders in Modern English and the functional motivations that gave rise to them? The answer is that it is so indirect as to defy any simple relationship between form and function. At least three pressures are involved in maintaining the two genitive orders in Modern English: the pressure of conventionality, the pressure to have animate specifiers and inanimate complements, and purely structural pressure, caused by the existence of noun phrases with the structure [NP’s N] and [N of NP] where there is no semantic possession at all:

| (42) | a. | Tuesday’s lecture |
|      | b. | the proof of the theorem |

Complicating still further the possibility of any transparent relationship between form and function is the problem of multiple competing factors, pulling on grammars from different directions. As has often been observed, the existence of competing motivations poses the danger of rendering functional explanation vacuous. For example, consider two languages L1 and L2 and assume that L1 has property X and L2 has property Y, where X and Y are incompatible (i.e., no language can have both X and Y). Now assume that there exists one functional explanation (FUNEX1) that accounts for why a language might have X and another functional explanation (FUNEX2) that accounts for why a language might have Y. Can we say that the fact that L1 has property X is ‘explained’ by FUNEX1 or the fact that L2 has property Y is ‘explained’ by FUNEX2? Certainly not; those would be totally empty claims. Given the state of our knowledge about how function affects form, we have no non-circular means for attributing a particular property of a particular language to a particular functional factor. The best we can do is to characterize the general, typological influence of function on form.

But this situation is typical of what is encountered in external explanation. Consider cigarette smoking and lung cancer. We know that smoking is a cause of lung cancer. We also know that eating lots of leafy green vegetables helps to prevent it. Now, can we say with confidence that John Smith, a heavy smoker, has lung cancer because he smokes? Or can we say that Mary Jones, a non-smoker and big consumer of leafy green vegetables, does not have lung cancer for that reason? No, we cannot. Most individuals who smoke several packs a day will never develop lung cancer, while many nonsmoking vegetarians will develop that disease. To complicate things still further, most smokers are also consumers of leafy green vegetables, so both external factors are exerting an influence on them. The best we can do is to talk about populations.

The external factors affecting language are far murkier than those affecting health. It would therefore be a serious mistake to entertain the idea of linking statements in particular grammars with functional motivations, as is the case in many autonomy-rejecting approaches. Rather, we need to set a more modest goal, namely, that of accounting for typological generalizations. But that is hardly an insignificant goal. If accomplished, it will have achieved one of the central tasks facing theoretical linguistics today: coming to an understanding of the relationship between grammatical form and those external forces that help to shape that form.

7. Why is syntax autonomous? – some speculative remarks

We will now offer some speculative remarks on why syntax is autonomous. After all, there is no logical necessity that it should be. We first observe that language serves many functions, which pull on it in many different directions. For this reason, most linguists agree that there can be no simple relationship between form and function. However, two functional forces do seem powerful enough to have ‘left their mark’ on grammar: the force pushing form and meaning into alignment (pressure for iconicity) and the force favoring the identification of the structure of the sentence as rapidly as possible (parsing pressure). Even these two pressures can conflict with each other, however, in some cases dramatically. Consider, for example, any case where there is parsing pressure to postpose a proper subpart of some semantic unit or where preference for topic before predication conflicts with pressure to have long-before-short, as in Japanese. The evolutionary problem, then, is to provide grammar with the degree of stability rendering it immune from the constant push-pull of conflicting forces. A natural solution to the problem is to provide language with a relatively stable core immune to the immanent pressure coming from all sides. That is, a natural solution is to endow language with a structural system at its core. Put another way, an autonomous syntax as an intermediate system between form and function is a clever design solution to the problem of how to make language both learnable and usable. This system allows language to be nonarbitrary enough to facilitate acquisition and use and yet stable enough not to be pushed this way and that by the functional force of the moment.

To sum up in one sentence — autonomous syntax is functionally motivated.

8. Conclusion

The paper began by raising the question whether, despite a common feeling to the contrary, functional explanation and the autonomy of syntax are compatible. The answer is a resounding ‘yes’. The evidence points to the fact that syntactic systems are formulated without regard to the meanings or uses to which those systems are put. Yet at the same time, the properties of the systems themselves are, to a significant degree, shaped by those uses. Indeed, the property of autonomy itself might well have a functional explanation.

REFERENCES

BAKER, C. L.: Notes on the description of English questions: The role of an abstract question morpheme. Foundations of Language, 6, 1970, p. 197–219.

BATES, E. – MacWHINNEY, B.: Functionalism and the competition model. In: B. MacWhinney – E. Bates (eds.), The Crosslinguistic Study of Sentence Processing. Cambridge University Press, Cambridge 1989, p. 3–73.

BERWICK, R. C. – WEINBERG, A.: The Grammatical Basis of Linguistic Performance. MIT Press, Cambridge, Mass. 1984.

BYBEE, J. L. – PERKINS, R. D. – PAGLIUCA, W.: The Evolution of Grammar: Tense, Aspect, and Modality in the Languages of the World. University of Chicago Press, Chicago 1994.

CHOMSKY, N.: Conditions on transformations. In: S. Anderson – P. Kiparsky (eds.), A Festschrift for Morris Halle. Holt Rinehart & Winston, New York 1973, p. 232–286.

CROFT, W.: Typology and Universals. Cambridge University Press, Cambridge 1990.

DENHAM, K.: Optional wh-movement in Babine-Witsuwit’en. Natural Language and Linguistic Theory, 18, 2000, p. 199–251.

DIK, S. C.: The Theory of Functional Grammar; Part 1: The Structure of the Clause. Functional Grammar Series, Vol. 9. Foris, Dordrecht 1989.

FIRBAS, J.: On the concept of communicative dynamism in the theory of Functional Sentence Perspective. SPFFBU, A 19. Brno 1971, p. 135–144.

FIRBAS, J.: On the operation of communicative dynamism in Functional Sentence Perspective. Leuvense Bijdragen, 76, 1987, p. 289–304.

FODOR, J. D.: Constraints on gaps: Is the parser a significant influence? In: B. Butterworth – B. Comrie – Ö. Dahl (eds.), Explanations for Language Universals. Mouton, Berlin 1984, p. 9–34.

GIVÓN, T.: Syntax: A Functional-Typological Introduction. Vol. 2. John Benjamins, Amsterdam 1990.

HAIMAN, J. (ed.): Iconicity in Syntax. Typological Studies in Language, Vol. 6. John Benjamins, Amsterdam 1985.

HALLIDAY, M. A. K.: An Introduction to Functional Grammar. Edward Arnold, London 1985.

HAWKINS, J. A.: A Performance Theory of Order and Constituency. Cambridge Studies in Linguistics. Vol. 73. Cambridge University Press, Cambridge 1994.

HEINE, B. – CLAUDI, U. – HÜNNEMEYER, F.: Grammaticalization: A Conceptual Framework. University of Chicago Press, Chicago 1991.

HOPPER, P. J. – THOMPSON, S. A.: The discourse basis for lexical categories in universal grammar. Language, 60, 1984, p. 703–752.

KIRBY, S.: Function, Selection and Innateness: The Emergence of Language Universals. Oxford University Press, Oxford 1998.

KROCH, A.: Morphosyntactic variation. In: K. Beals (ed.), Papers from the 30th Regional Meeting of the Chicago Linguistic Society. Chicago Linguistic Society, Chicago 1994, p. 180–201.

LANGACKER, R.: Foundations of Cognitive Grammar; Vol. 1: Theoretical Prerequisites. Stanford University Press, Stanford 1987.

LASNIK, H. – STOWELL, T. A.: Weakest crossover. Linguistic Inquiry, 22, 1991, p. 687–720.

McDANIEL, D.: Partial and multiple wh-movement. Natural Language and Linguistic Theory, 7, 1989, p. 565–604.

NEWMEYER, F. J.: Functional explanation in linguistics and the origins of language. Language and Communication, 11, 1991, p. 3–28.

NEWMEYER, F. J.: Language Form and Language Function. MIT Press, Cambridge, Mass. 1998.

NEWMEYER, F. J.: How language use can affect language structure. In: K. Inoue – N. Hasegawa (eds.), Linguistics and Interdisciplinary Research: Proceedings of the COE International Symposium. Kanda University of International Studies, Chiba, Japan 2001, p. 189–209.

PAYNE, D. L.: What counts as explanation? A functionalist approach to word order. In: M. Darnell – E. Moravcsik – F. J. Newmeyer – M. Noonan – K. Wheatley (eds.), Functionalism and Formalism in Linguistics. John Benjamins, Amsterdam 1998, p. 135–164.

PRINCE, E.: A comparison of wh-clefts and it-clefts in discourse. Language, 54, 1978, p. 883–906.

ROSENBACH, A. – VEZZOSI, L.: Genitive constructions in early Modern English: New evidence from a corpus analysis. In: R. Sornicola – E. Poppe – A. Shisha-Halevy (eds.), Stability, Variation, and Change of Word Order over Time. John Benjamins, Amsterdam 2000, p. 285–307.

SGALL, P. – HAJIČOVÁ, E. – PANEVOVÁ, J.: The Meaning of the Sentence in Its Semantic and Pragmatic Aspects. Reidel, Dordrecht 1986.

VAN VALIN, R. D.: A synopsis of role and reference grammar. In: R. D. Van Valin (ed.), Advances in Role and Reference Grammar. John Benjamins, Amsterdam 1993, p. 1–164.

RÉSUMÉ

Formal linguistics and functional explanation: Bridging the gap

The question of how far grammar is shaped by its function, that is, by the fact that it enables people to use language for communication and in rational thought, can be answered once we consider that the autonomy of syntax (rightly assumed by the formal approach) does not exclude an influence of language use on the properties of grammar. An analysis of examples from the domain of word order (including its functions given by topic-focus articulation), of structural iconicity (an at least partial parallelism between syntactic and semantic relations in causatives, possession, etc.), and of the pressure for relative ease of parsing (rapid comprehension of the perceived sentence) shows that the consequences of this influence are by no means negligible. In the course of development, new constructions arise in languages under the pressure of new functions; these constructions then gradually become independent and generalized, without a direct relation to function. The autonomy of syntax, renewed again and again in this way, may thus have a functional explanation, like other properties of grammar.

[1] Some of the material in this paper appeared originally in Newmeyer, 1998, and Newmeyer, 2001, and is reprinted here with the permission of M.I.T. Press and the Kanda University of International Studies respectively.

[2] One might suggest that the four wh-constructions of (8–11) do in fact share some semantic/pragmatic similarities. In each case, there is some element X, denoted by the extracted wh-phrase, and which is defined by the rest of the clause Y (as ‘the X of which Y is true’). While not incorrect, such a characterization does not distinguish wh-constructions from a multitude of other constructions, including those with fronted topic and focus phrases and even garden-variety active declarative sentences, in which a subject is followed by a predicate.

[3] ‘Economy’ and ‘frequency’ are also commonly cited as important functional motivations. I assume that they are not independent forces, but rather metaprinciples governing forces like (25a–c).

University of Washington

e-mail: fjn@u.washington.edu

Slovo a slovesnost, volume 63 (2002), number 2, pp. 81–97
